Introduction

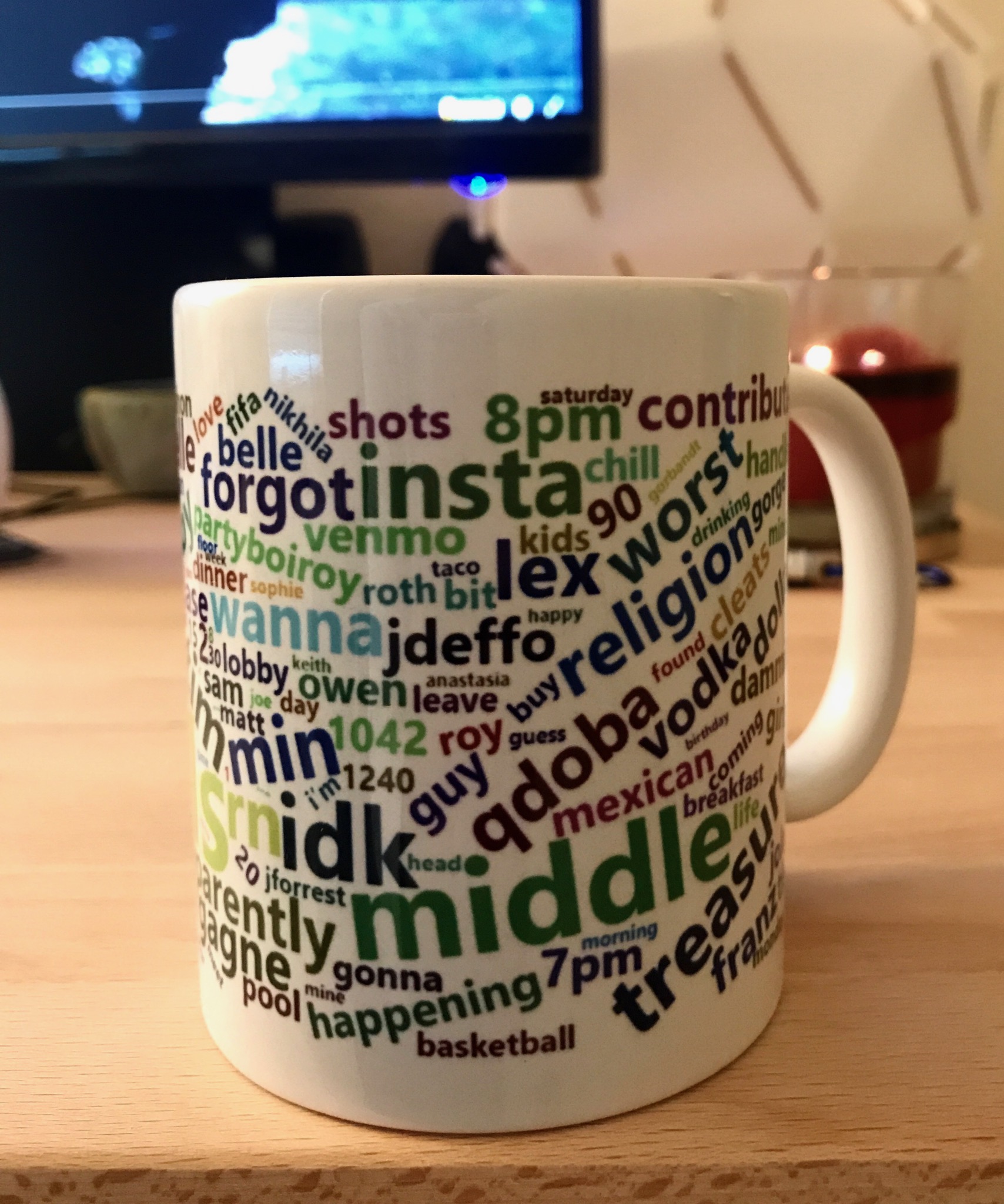

This year my friends took part in a Secret Santa mug exchange. Determined to not be outdone, I decided to create a completely personalized mug, featuring a word cloud of all the words my giftee wrote in a GroupMe chat during our internship together. I’ll dive into how to do thi using the GroupMe API, but if you use a different method to get your words you can still follow steps 2 and 3 to create and export your word cloud for whatever personalized item you want to put it on.

Step 1: Get the Data

As usual, the first step is to retrieve all the relevant data. My friends all used GroupMe to communicate so I’ll be using their API to get our group messages. In the GroupMe API, you can view messages from anyone in a group you’re in. However, I’ll only be including messages I’ve written in this post to preserve all my friends’ privacy.

First we have to include the libraries in R.

Libraries

library(rvest) # parse html

library(stringr) #string functions

library(jsonlite) # parse json

library(tidyverse) # make R fun

library(lubridate) # parse dates

library(tidytext) # text functions

library(httr) # api requestsAPI Key

Before we can get any data from the GroupMe API, we’ll need to get an API key. Log into your GroupMe account at dev.groupme.com. In the menu bar at the top right you will see an access token menu item.

GroupMe Access Token Menu

Click the menu item to get your key and save it as a variable in your R project.

groupme.api.key <- "iamanapikey"Query the Data

The GroupMe API separates messaging calls from group calls. You can specify the group(s) you want messages from using group IDs, which will allow you to use the messaging API to get messages from the group(s).

First, I’ll get a list of all my groups.

connection.url <- 'https://api.groupme.com/v3/groups'

groups.query.response <- GET(connection.url,

query = list(

token = groupme.api.key

))

parsed.group.response <- content(groups.query.response, "parsed")You can explore the parsed.group.response to find the group you need if you’re only looking for one group’s messages. Instead, I’m going to get every message from all my groups using a list of the group IDs.

group.ids <- lapply(parsed.group.response$response,

function(x){ x$group})Test Getting Messages

Before trying to get every message from every group, we should test out the API with a single group.

Get your group ID.

test.group.id <- group.ids[1]Query a group for messages.

#/groups/:group_id/messages

connection.url <- paste0("https://api.groupme.com/v3/groups/",

test.group.id,

"/messages")

query.response <- GET(connection.url,

query = list(

token = groupme.api.key

))

parsed.data <- content(query.response, "parsed")I’ll use lapply to get the fields I want out of the message.

messages <- lapply(

parsed.data$response$messages,

function(x){

as.vector(x[c("sender_id",

"name",

"text")])

}

)View the messages to see messages people wrote in that group.

head(messages)When converting to a vector we lose our null values, which makes it hard to get the messages into a dataframe format and create a word cloud. To get around that issue I am using a function to convert any null items to NA.

get.non.null <- function(item){

if(is.null(item)){

return(NA)

}

else{

return(item)

}

}Now we can convert our sample into a dataframe using the get.non.null function.

message.df <- data.frame("id"= c(NA),

"name" = c(NA),

"text" = c(NA),

stringsAsFactors = FALSE)

for(message in messages){

message.vector <- c(get.non.null(message$sender_id[1]),

get.non.null(message$name[1]),

get.non.null(message$text[1]))

# print(message.vector)

message.df <- rbind(message.df,message.vector)

}summary(message.df)The GroupMe API also limits how many messages you can query at a time (default is 20). To get all of the messages from a single group we have to query multiple times.

while(query.response$status_code == 200){

parsed.data <- content(query.response, "parsed")

messages <- lapply(parsed.data$response$messages,

function(x){x[c("id",

"sender_id",

"name",

"text")]})

for(message in messages){

message.vector <- c(get.non.null(message$sender_id[1]),

get.non.null(message$name[1]),

get.non.null(message$text[1]))

# print(message.vector)

message.df <- rbind(message.df,message.vector)

}

last.message.id <- message.df$id[length(message.df$id)]

#query again starting at the last message

query.response <- GET(connection.url,

query = list(

token = groupme.api.key,

before_id = last.message.id

))

}

#success if 304, otherwise exited on failed query

print(paste("exited with code:", query.response$status_code))summary(message.df)Make a Function

Combine everything into a function so we can repeat for every group.

get.messages <- function(group_id){

#Connect to API for messages in the group

connection.url <- paste0("https://api.groupme.com/v3/groups/",

group_id,

"/messages")

#Define what we want out of a group message

message.df <- data.frame("group_id" = c(NA),

"message_id" = c(NA),

"sender_id"= c(NA),

"name" = c(NA),

"text" = c(NA),

stringsAsFactors = FALSE)

#Query first 100 messages of a group

query.response <- GET(connection.url,

query = list(

token = groupme.api.key,

limit = 100

))

#Continue querying for messages until we receive an API error

while(query.response$status_code == 200){

parsed.data <- content(query.response, "parsed")

#Parse message fields

messages <- lapply(parsed.data$response$messages,

function(x){x[c("id",

"sender_id",

"name",

"text")]})

#Convert into a dataframe

for(message in messages){

message.vector <- c(group_id,

get.non.null(message$id[1]),

get.non.null(message$sender_id[1]),

get.non.null(message$name[1]),

get.non.null(message$text[1]))

# print(message.vector)

message.df <- rbind(message.df,message.vector)

}

#Get latest message ID

last.message.id <- message.df$message_id[length(message.df$message_id)]

#Query for more messages

query.response <- GET(connection.url,

query = list(

token = groupme.api.key,

limit = 100,

before_id = last.message.id

))

}

#success if 304, otherwise failed

print(paste("exited with code:", query.response$status_code))

return(message.df)

}It’s time to actually retrieve all the data we need from GroupMe. I’m both combining all the data and saving each group to its own file.

#Create our final dataframe

all.messages.df <- data.frame("group_id"= c(NA),

"message_id" = c(NA),

"sender_id"= c(NA),

"name" = c(NA),

"text" = c(NA),

stringsAsFactors = FALSE)

#Run our function on every group

for(id in group.ids){

print(paste("group", id))

#Check how long it takes to get all messages

time <- system.time(messages.df <- get.messages(id))

print(time)

#save as individual rds file

saveRDS(messages.df,

file = paste0("../data/group_messages/",id,".rds"))

all.messages.df <- rbind(all.messages.df, messages.df)

}

#save final dataframe

saveRDS(all.messages.df, file = "../data/all_messages.rds")summary(all.messages.df)## group_id message_id sender_id

## Length:5376 Length:5376 Length:5376

## Class :character Class :character Class :character

## Mode :character Mode :character Mode :character

## name text

## Length:5376 Length:5376

## Class :character Class :character

## Mode :character Mode :characterStep 2: Create a Word Cloud

Once all the message data has been collected, getting it into a format suitable for a word cloud is pretty simple with tidytext and wordcloud2.

The most common word cloud is built from word frequency, where the largest words are those that occur the most.

First, we’ll need to filter out the messages we don’t care about. We only want my messages, so I need to find my ID. An easy way to find it is with string matching.

Save your sender ID for filtering.

sender.id <- "myid"Be sure to filter messages with certain words or phrases early, because it can be harder to filter once they are split into words. For GroupMe, I’m filtering out any media messages or links, which show as plain text through the API.

user.messages <- all.messages.df %>%

filter(sender_id == sender.id )%>%

filter(!str_detect(text, ".com"))library(wordcloud2)

words <- user.messages %>%

unnest_tokens(word, text) %>%

anti_join(stop_words) %>%

count(word, sort = TRUE) %>%

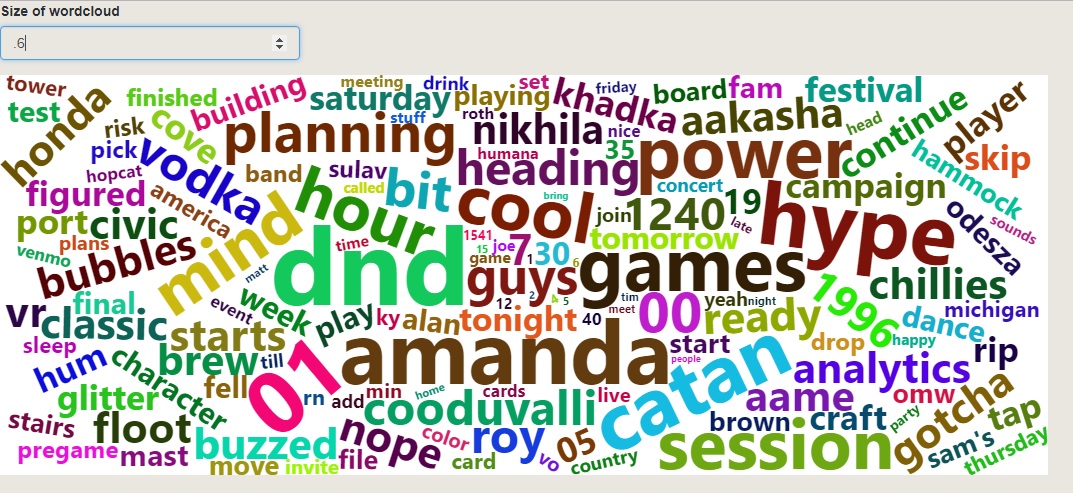

rename(count = n)## Joining, by = "word"wordcloud2(words, shape="square")You can tell by my word cloud that I like to play Settlers of Catan and D&D with my friends.

Most Relevant Words

For this gift I wanted to stray from word frequency. Since we have a group of many people messaging, I can compare the words my giftee used with what everyone else said using Term Frequency, Inverse Document Frequency (TF-IDF).

This time we don’t start by filtering a single person’s messages, instead only removing bad messages such as links containing “.com”.

filtered.messages <- all.messages.df %>%

filter(!str_detect(text, ".com"))TF-IDF is created on all messages, and grouped by each person with the final function bind_tf_idf(word, sender_id, n).

group.words <- filtered.messages %>%

unnest_tokens(word, text) %>%

anti_join(stop_words) %>%

count(word, sender_id, sort = TRUE)## Joining, by = "word"group.words.tfidf <- group.words %>%

bind_tf_idf(word, sender_id, n)With wordcloud2 we can easily use the tf_idf numbers in place of word frequency. The package automatically determines how to size the words and doesn’t require whole numbers. We also won’t need to filter stop words with tf_idf, although you still can if you want to. TF-IDF adjusts for usage across everyone. Stop words, which everyone uses in the group, will have lower values. This is particularly helpful for me as my name (Will), is a stop word. We only need to filter a single person at this final step and remake the word cloud.

my.tfidf <- group.words.tfidf %>%

filter(sender_id == sender.id)

wordcloud.df <- my.tfidf %>%

select(word, tf_idf)

wordcloud2(wordcloud.df)Step 3: Download the Word Cloud

This step is a little tricky and you may want to just look into using the package wordcloud instead of wordcloud2. The wordcloud package will create a jpeg, which is easy to export, while wordcloud2 is made in HTML and is harder to get an image. However, I like how Wordcloud2 looks better, and it allows you to create word clouds in shapes or letters so I used a couple workarounds.

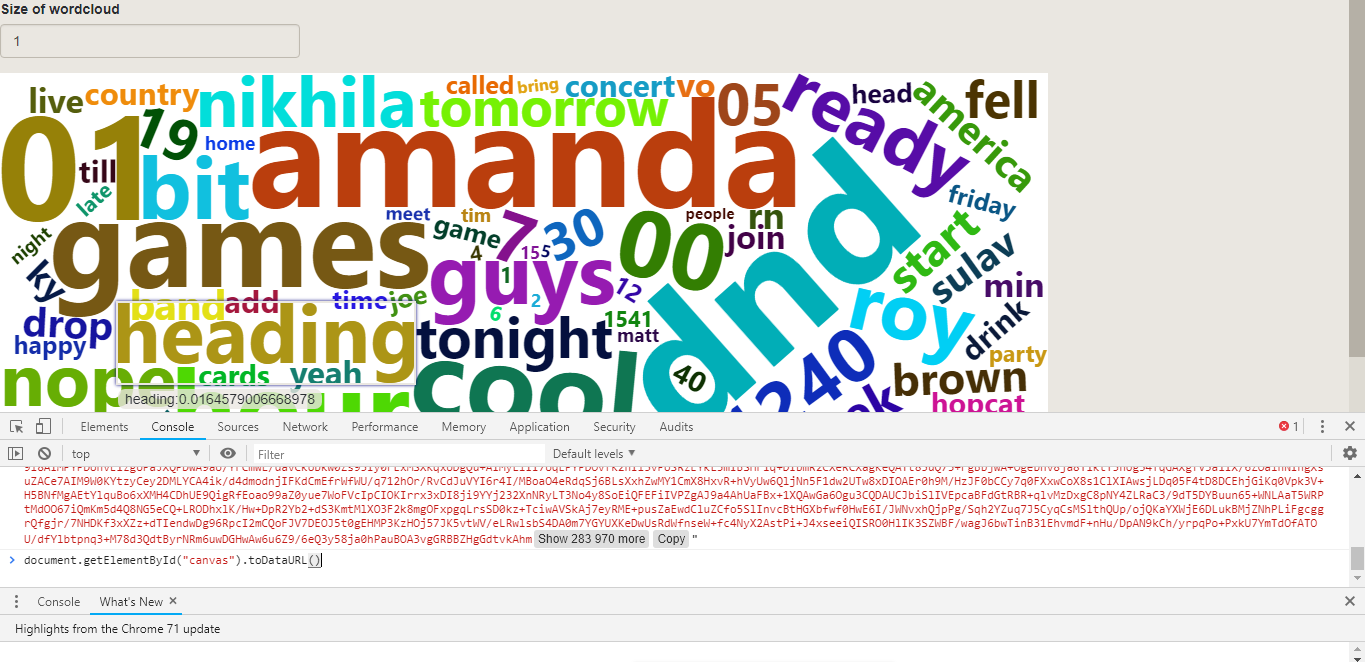

From HTML, there is a simple JavaScript trick that allows you to download as an image.

document.getElementById("canvas").toDataURL()This will get a link to an image you can download. However, this command seems to crash rstudio’s viewer. To get around that, I made a Shiny app with the same word cloud code. Having a Shiny app will allow you to view in Chrome as well as edit the size of the word cloud canvas easily. I also added an input to control the size of words, which allows you to quickly experiment and get the word cloud that you like. Below is the full Shiny app code.

# to save run following in js

#document.getElementById("canvas").toDataURL()

library(shiny)

library(wordcloud2)

library(tidyverse)

library(tidytext)

library(stringr)

#Define id and size of words

n <- 1

sender.id <- "myid"

#Get message data

all.message.dfs <- readRDS("../all_messages.rds")

#Filter out links

filtered.messages <- all.message.dfs %>%

filter(!str_detect(text, ".com"))

#Get word counts

all.words <- filtered.messages %>%

unnest_tokens(word, text) %>%

anti_join(stop_words) %>%

count(word, sender_id, sort = TRUE)

#Create TFIDF

all.words.tfidf <- all.words %>% bind_tf_idf(word, sender_id, n)

#Filter TFIDF

user.tfidf <- all.words.tfidf %>%

filter(sender_id == sender.id)

#format for wordcloud

wordcloud.text <- user.tfidf %>%

filter(!is.na(word)) %>%

select(word, tf_idf)

# Size the wordcloud

ui <- bootstrapPage(

numericInput('size', 'Size of wordcloud', n),

wordcloud2Output('wordcloud2', width = "1048px", height = "400px")

)

# Define server logic required to draw the wordcloud

server <- function(input, output) {

output$wordcloud2 <- renderWordcloud2({

wordcloud2(wordcloud.text, size=input$size)

})

}

# Run the application

shinyApp(ui = ui, server = server)This blog is a static website so I can’t embed the Shiny app, but here is what it should look like once you reference your own message data.

Now all you have to do is inspect the page in your web browser and write the command to get a URL in the JavaScript console tab.

document.getElementById("canvas").toDataURL()

Finally, we got a word cloud image with R. The Shiny page is a bit of an annoying workaround and I’ve been having issues with wordcloud2 correctly doing image masking. R might not be the best tool to get a word cloud after getting word frequency or TF-IDF, but it is doable.

I used Zazzle to create my mug. Zazzle was able to infer that all white space was supposed to be transparent and the resulting product looks pretty great.

Have fun making your word cloud merchandise!